
Training for Tough Conversations: How Agentic AI Supports Healthcare Providers

Most clinicians receive less than 15 minutes of formal communication training throughout their careers. Yet they’re expected to lead some of the most emotionally charged conversations in healthcare, including those about serious illness, palliative care, end-of-life care, and what matters most to patients and their families.

Alex Ralevski, a senior data scientist at Tegria, is working with Providence’s Data Science team and the Institute for Human Caring to address this gap by reimagining how we teach communication in clinical settings. Through a novel application of large language models (LLMs) and agentic AI, the team created an emotionally responsive training tool that simulates realistic, high-stakes conversations between patients and providers.

This work represents a new direction in healthcare training—one where technology does not replace human connection, but actually supports deeper and more meaningful human interactions.

“We’re creating emotionally realistic training experiences that give clinicians a safe space to practice empathy and improve their communication over time.”

Alex Ralevski, Senior Data Scientist, Tegria

The Challenge: Training That Works, But Doesn’t Scale

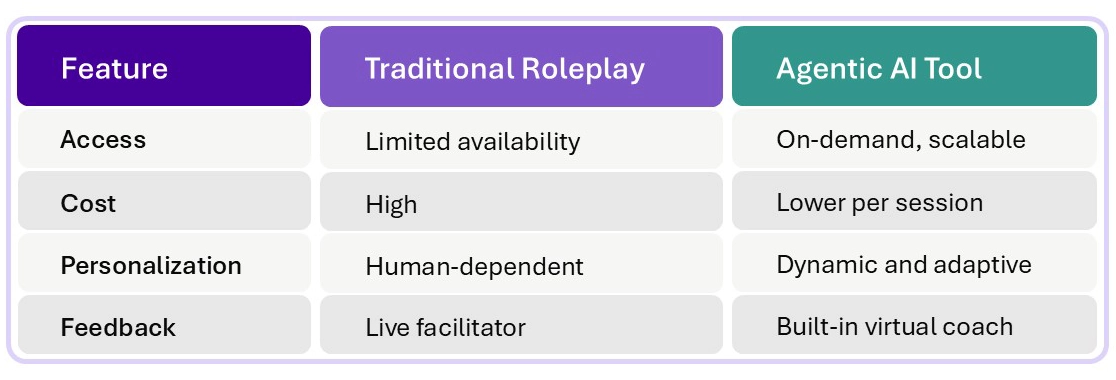

In many healthcare organizations, communication training involves roleplay with standardized patient actors. These sessions are highly effective and often led by expert facilitators, but they are resource-intensive. As a result, only a small percentage of providers have access to this kind of training.

Health systems seeking to expand empathy education face a key challenge: how to scale a highly personalized learning experience. The innovation from Tegria and Providence addresses this by replicating the most impactful part of the training—the live roleplay—in a virtual environment that can reach more clinicians, more often. The result is a prototype that provides scalable, on-demand, realistic empathy training powered by LLMs.

Empathy Training: Traditional Approaches vs. Agentic AI

A Smarter Simulation: How Agentic AI Enhances Realism

The foundation of the tool is a set of detailed patient personas, each grounded in clinical expertise and designed to reflect emotional nuance. These personas interact with clinicians using natural language responses generated by GPT-4o, OpenAI’s LLM. The realism of the interaction is powered by an agentic AI architecture: a multi-agent system that introduces greater depth and adaptability into each conversation.

Three agents are involved in each simulation. One agent (the Patient Agent) serves as the AI patient and adjusts its responses based on evolving emotional and cognitive states, including anger, trust, and confusion. Another agent, the Conversation Analysis Agent, monitors the conversation to evaluate the doctor’s sentiment and adherence to the Serious Illness Conversation Guide.

Based on this analysis, the Conversation Analysis Agent updates the emotional and cognitive states of the AI patient to simulate realistic patient responses. The patient’s responses are updated dynamically throughout the conversation in response to how the clinician communicates, just as a real-world conversation might evolve between a doctor and a patient.
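To make the flow concrete, here is a minimal sketch of a single conversation turn: the analysis step updates the patient state first, and the patient reply is then generated from the updated state. The function names (`run_turn`, `stub_analyze`, `stub_respond`), the dictionary-based state, and the numeric values are assumptions made for illustration. The stubs stand in for LLM-backed agents and are not the team's implementation.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]          # e.g. {"anger": 0.2, "trust": 0.5, "confusion": 0.2}
History = List[Tuple[str, str]]   # (speaker, text) pairs


def stub_analyze(clinician_text: str, history: History, state: State) -> State:
    """Placeholder for the Conversation Analysis Agent (an LLM in the real tool).

    Hypothetical rule: very long turns cost a little trust, short ones build it.
    """
    delta = -0.1 if len(clinician_text.split()) > 40 else 0.1
    updated = dict(state)  # copy so the caller's state is not mutated
    updated["trust"] = max(0.0, min(1.0, state["trust"] + delta))
    return updated


def stub_respond(clinician_text: str, history: History, state: State) -> str:
    """Placeholder for the Patient Agent (an LLM in the real tool)."""
    if state["trust"] < 0.4:
        return "I don't know."
    return "I've been thinking about that a lot, honestly."


def run_turn(
    clinician_text: str,
    state: State,
    history: History,
    analyze: Callable[[str, History, State], State],
    respond: Callable[[str, History, State], str],
) -> Tuple[State, History]:
    """One turn: analyze the clinician, update state, then generate the reply."""
    new_state = analyze(clinician_text, history, state)
    reply = respond(clinician_text, history, new_state)
    new_history = history + [("clinician", clinician_text), ("patient", reply)]
    return new_state, new_history
```

Passing the agents in as callables keeps the loop independent of any particular model or provider, so the stubs could be swapped for LLM calls without changing the turn logic.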

For example, a clinician who uses too much medical jargon may cause confusion to rise, while a clinician who listens and reflects the patient’s emotions may build trust. These changes shape how the AI patient responds: brief, guarded replies when trust is low, and more open, expressive responses when trust is high.
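The jargon and reflection examples can be sketched as a small state update. Everything here is illustrative: the word list, the reflective phrases, the step size, and the trust threshold are assumptions, and simple keyword matching stands in for the LLM-based analysis the article describes.

```python
from dataclasses import dataclass, replace

# Hypothetical vocabularies; a real analysis agent would use an LLM, not keywords.
JARGON = {"metastatic", "prognosis", "palliative", "idiopathic"}
REFLECTIVE_PHRASES = ("it sounds like", "i hear", "that must be")


@dataclass(frozen=True)
class PatientState:
    anger: float = 0.2
    trust: float = 0.5
    confusion: float = 0.2


def clamp(x: float) -> float:
    """Keep every state value inside [0, 1]."""
    return max(0.0, min(1.0, x))


def analyze_turn(clinician_text: str) -> dict:
    """Count jargon terms and detect whether the clinician reflects emotion."""
    text = clinician_text.lower()
    words = {w.strip(".,;:!?") for w in text.split()}
    return {
        "jargon_terms": len(words & JARGON),
        "reflects_emotion": any(p in text for p in REFLECTIVE_PHRASES),
    }


def update_state(state: PatientState, analysis: dict, step: float = 0.1) -> PatientState:
    """Jargon raises confusion; reflecting the patient's emotion raises trust."""
    confusion = clamp(state.confusion + step * analysis["jargon_terms"])
    trust = clamp(state.trust + (step if analysis["reflects_emotion"] else 0.0))
    return replace(state, trust=trust, confusion=confusion)


def response_style(state: PatientState, low_trust: float = 0.4) -> str:
    """Map trust to the reply style described in the text."""
    return "brief and guarded" if state.trust < low_trust else "open and expressive"
```

For instance, `update_state(PatientState(), analyze_turn("It sounds like this has been frightening."))` raises trust by one step, while a turn full of clinical terms raises confusion instead.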

The team also introduced a measure called “loquacity,” which controls how much the AI patient speaks based on emotional context and the stage of the conversation. This helps simulate real-world variations in patient behavior.
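One way to picture loquacity is as a score between 0 and 1 that becomes a length limit in the patient prompt. The sketch below is an assumption throughout: the stage names, baselines, weights, and sentence range are invented for illustration, since the article does not describe how the team computes the measure.

```python
# Hypothetical conversation stages and baseline talkativeness for each.
STAGE_BASELINE = {"opening": 0.3, "exploring": 0.7, "closing": 0.5}


def loquacity(trust: float, anger: float, stage: str) -> float:
    """Return a 0-1 talkativeness score from emotional context and stage.

    Assumed relationship: trust makes the patient talk more, anger less.
    """
    base = STAGE_BASELINE[stage]
    score = base + 0.4 * (trust - 0.5) - 0.3 * anger
    return max(0.0, min(1.0, score))


def max_sentences(score: float, lo: int = 1, hi: int = 6) -> int:
    """Scale the score to a sentence budget between lo and hi."""
    return lo + round(score * (hi - lo))


def length_instruction(trust: float, anger: float, stage: str) -> str:
    """Build a prompt line that caps the length of the patient's reply."""
    n = max_sentences(loquacity(trust, anger, stage))
    return f"Reply in at most {n} sentence{'s' if n != 1 else ''}."
```

Under these assumed weights, a distrustful, angry patient at the start of the conversation is limited to a single sentence, while a trusting patient mid-conversation gets a budget of several.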

In addition to the Patient Agent and the Conversation Analysis Agent, the system includes a third AI agent that acts as a virtual coach. This Coach Agent monitors the conversation and provides real-time feedback and suggestions to the clinician. It might prompt the user to pause, ask an open-ended question, or respond to an emotional cue from the patient.

The Coach Agent helps clinicians stay engaged and learn in the moment. It also replicates some of the mentoring that would typically happen during live training with an experienced facilitator.

A New Direction for Empathetic AI in Healthcare

While many AI tools in healthcare are built to optimize workflows or streamline operations, this project highlights a different use case: supporting the human side of care. By simulating emotionally realistic patient interactions, agentic AI using LLMs provides a new pathway for scalable, flexible, and personalized empathy training.

This approach has potential beyond palliative care. It could be adapted for behavioral health, crisis response, human resources, or any setting where communication and emotional intelligence are critical. Its modular design makes it easy to tailor for different clinical scenarios, training goals, and user groups.

Developing such realism required careful prompt engineering and continuous iteration. The team worked to ensure the AI patient expressed emotions in subtle, authentic ways. This meant avoiding explicit labeling of feelings and instead relying on tone, word choice, and pacing to communicate emotional states, just as a real patient might.
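As a rough illustration of this idea, the sketch below turns numeric emotional states into behavioral instructions for the patient prompt instead of emotion labels. The prompt wording, the thresholds, and the persona are all invented for this example and are not the team's actual prompts.

```python
def describe(level: float, low: str, mid: str, high: str) -> str:
    """Pick a behavioral instruction for a 0-1 level (assumed thirds)."""
    if level < 0.34:
        return low
    if level < 0.67:
        return mid
    return high


def build_patient_prompt(persona: str, trust: float, anger: float, confusion: float) -> str:
    """Build a system prompt that conveys emotion through behavior, not labels."""
    cues = [
        describe(
            trust,
            "Keep answers short and hold back personal details.",
            "Share some details but stay cautious.",
            "Speak openly and volunteer what worries you.",
        ),
        describe(
            anger,
            "Keep an even tone.",
            "Let some impatience show in your word choice.",
            "Use clipped, sharp phrasing.",
        ),
        describe(
            confusion,
            "Follow the conversation easily.",
            "Ask for clarification now and then.",
            "Ask the clinician to repeat or explain things in plain words.",
        ),
    ]
    rules = (
        "Never name your emotions directly (avoid phrases like 'I feel angry'). "
        "Show them through tone, word choice, and pacing."
    )
    return "\n".join([f"You are role-playing this patient: {persona}", *cues, rules])
```

The resulting string would serve as the system message for whichever chat model plays the patient. Translating states into behaviors before they reach the model is one way to keep the patient from announcing its feelings outright.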

Dive deeper into Alex’s agentic AI work by viewing her presentation at the Databricks Data + AI Summit, “Agentic Architectures to Create Realistic Conversations: Using GenAI To Teach Empathy in Healthcare.”

What’s Next for This Work

The team is actively exploring future enhancements, including support for additional emotional states like anxiety and sadness, as well as more dynamic voice responses through text-to-speech tools whose delivery changes as the AI patient’s emotions change. Plans are also underway to enable live coaching conversations between the clinician and the Coach Agent. Automated prompt generation and persona updates are additional areas of focus.

As agentic AI continues to evolve, ongoing collaboration, testing, and refinement will help to further personalize the experience. This adaptability is essential for developing emotionally intelligent training tools that stay responsive to the needs of both clinicians and patients across care settings. By thoughtfully applying agentic AI to support complex communication skills, the team is helping clinicians gain more opportunities to practice empathy in a safe, flexible environment.